Different Graph Algorithms for Different Questions

Before getting into the algorithms, we should review what makes up a graph and how it relates to the move graph for Color Walk. Most graph algorithms, beyond the basic BFS and DFS, are designed to work on graphs with weighted edges. A weight on an edge means the edge has a value associated with it that can be thought of as a cost of traversing that edge from one vertex to another. A vertex is the same thing as what we've been calling, so far, a node, because we've been talking about the game's move graphs as trees. Internal nodes in a tree are connected by edges and end in leaf nodes. The root node of the move tree is just another vertex, and in the case of a generic graph, any vertex in the graph could potentially be the root node of a corresponding tree. We simply have to pick which vertex we want to use as the root, and the rest of the tree branches out from there. In the case of our move graph, we pick the vertex with the starting board position as the root of the tree.

We can further compare trees and graphs by noticing that a tree is a special type of graph, namely a directed, acyclic graph (DAG). Directed means that edges are traversed in one direction, and in the move graph that direction is from vertices corresponding to earlier moves to vertices corresponding to later moves. More precisely, the vertices are the board positions that are arrived at for each move, and the edges represent the moves that change one board position into another board position by removing blocks.

Acyclic means that a vertex cannot be revisited by following some finite number of edges without backtracking (since the DAG has directed edges, we can't backtrack anyway). The move graph isn't quite acyclic because each vertex has an edge to itself for the same move as the move that results in that board position. Some vertices also have edges that come back to themselves because the corresponding move doesn't remove any blocks, resulting in the same board position. These cycles can easily be detected and eliminated, as we saw in the BFS and DFS algorithms, so once these cyclic edges are removed, the move tree can be thought of as a DAG, even if it isn't one in the strictest sense.

Returning to the idea of edge weights, most graph algorithms work by optimizing the cost of traversing edges by their weights. A path with the lowest sum of weights is considered the best path, and graph algorithms will work to find those paths. We have already been assigning weights to the edges in our greedy algorithms. We called them heuristics instead of weights, but they amount to the same thing. The heuristic values associated with each move can be considered the weights assigned to each corresponding edge in the graph. Different heuristics are different weight functions. For example, in the basic greedy algorithm the heuristic was the number of blocks removed on a given move. The number of blocks removed would be the weight of the edge corresponding to that move. So far all of the weights we've used have been positive, but graph edges can have negative weights as well. Some graph algorithms, such as Bellman-Ford, will work with negative weights, while others, such as the textbook form of Dijkstra's algorithm, require all weights to be non-negative.
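To make the idea of a weight function concrete, here is a minimal sketch of a path whose cost is the sum of its edge weights. The `path` array, its `color` and `blocksRemoved` fields, and the `pathCost` helper are hypothetical names used only for illustration; they are not part of the Color Walk code.

```javascript
// Hypothetical move sequence: each entry is one edge in the move graph,
// carrying the number of blocks that move removed.
const path = [
  { color: 'red', blocksRemoved: 3 },
  { color: 'blue', blocksRemoved: 7 },
  { color: 'green', blocksRemoved: 2 },
];

// A weight function maps an edge to a number.
// Swapping in a different function is the same as switching heuristics.
const greedyWeight = (edge) => edge.blocksRemoved;
const moveCountWeight = () => 1;

// The cost of a path is the sum of the weights of its edges.
function pathCost(edges, weightFn) {
  return edges.reduce((sum, edge) => sum + weightFn(edge), 0);
}

console.log(pathCost(path, greedyWeight));    // 12
console.log(pathCost(path, moveCountWeight)); // 3
```

The same path gets a different cost under each weight function, which is all that separates one heuristic from another.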

Graph algorithms can be classified into four different types, based on what they solve for a given graph. Minimum spanning tree algorithms will find the minimum set of edges, taking weights into account, that connects all of the nodes in the graph. Basically, these algorithms remove the most costly and unnecessary edges from the graph to create a tree still containing all vertices from the original graph. Since our move graph is already a tree and we're interested in finding the minimum path from one vertex to another, minimum spanning tree algorithms won't be too useful for us, but they do solve a large class of problems, including automatic routers for circuit board or integrated circuit layouts, for example.

The shortest paths algorithms are the ones we're most interested in. These algorithms find the lowest-cost path from a source vertex to all other vertices in the graph. It is interesting that solving this problem is asymptotically as fast as finding the shortest path between two specific nodes, but we are working with an enormous graph, so we'll have to make some modifications to Dijkstra's algorithm, the shortest path algorithm we'll use, to make it tractable for the move graphs. Part of that modification will be stopping early when we find the shortest path to the end vertex we're looking for.

A closely related type is the all-pairs shortest paths algorithms. These algorithms will efficiently find the shortest paths between all pairs of vertices in a graph. This problem seems much larger than the single-source shortest path problem, but it can actually be solved faster than iterating through every vertex and running Dijkstra's algorithm from each one. We don't need to do this for our problem, so we won't be looking into this type of algorithm here.

The last type is the maximum flow algorithms. These algorithms take a pair of vertices and find the maximum rate that something can move through the graph from one vertex to the other without overflowing any of the weights in the graph. The weights can be thought of as capacities for each edge between vertices, and this type of problem has all kinds of applications such as electricity in wires, liquids in pipes, traffic flow, assembly lines, and much more. Yet, it's not useful for our immediate problem, although it's good to know that these algorithms exist.

Single-Source Shortest Path Algorithms

As described just a moment ago, single-source shortest path algorithms will find the shortest path from a source vertex to all other vertices in the graph. Let's ignore for the moment that this is a colossal problem for our move graphs, with well over $4^{40}$ vertices for most boards even after trimming out all cyclical edges. Instead, think about what the graph looks like. It's a tree with a single root node, our source vertex, that spreads out at each level by a factor of four.

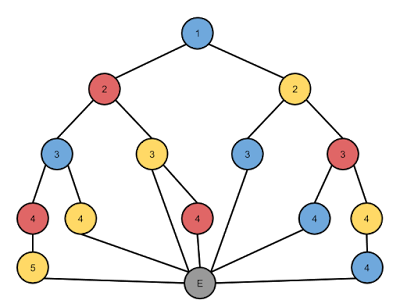

At some point the branches in the exponentially expanding tree will start reaching leaf nodes, but these leaf nodes are, in fact, all the same vertex—an empty board. That means at some point every branching path in the tree will end up at the same vertex with an enormous number of edges coming into it, and we want to find the one that comes from the path through the least number of vertices. This is a potentially simplifying insight that we'll have to keep in mind. Take the example sub-graph pictured below as an example, where there are three colors to choose from for moves, and each vertex is labeled with its move number. Eventually, every path of moves ends in the same place, at the end vertex.

The most straightforward way to find this path is to use the Bellman-Ford algorithm. This algorithm will sweep through every vertex in the graph, and for each vertex it will take each edge in the graph and relax it. You see, each edge has a source vertex, where the edge comes from, and a sink vertex, where the edge goes to. Each vertex will also have a distance associated with it that starts at infinity, unless it is the overall source vertex where the path starts, in which case the distance is zero. Relaxing an edge means that we compare the distance of the sink vertex with the distance of the source vertex plus the edge weight. If the sink distance is more, then the sink distance will be updated to the source distance plus the edge weight. The pseudo-JavaScript code for the Bellman-Ford algorithm looks like this:

    function BellmanFord(graph) {
      // Sweep over every edge in the graph once for each vertex.
      _.each(graph.vertices, function(vertex) {
        _.each(graph.edges, function(edge) {
          relax(edge);
        });
      });
    }

    function relax(edge) {
      // If the path through the source vertex is cheaper, update the sink.
      if (edge.sink.distance > edge.source.distance + edge.weight) {
        edge.sink.distance = edge.source.distance + edge.weight;
        edge.sink.previous = edge.source;
      }
    }

This algorithm is dead simple, but also completely unworkable for our problem for two reasons. First, it requires us to generate and store the entire move graph before running the algorithm. This task has already been shown to be quite beyond the ability of any computer for the foreseeable future. Second, we have to run relax(edge) for every edge in the graph, and do that full sweep of edges for every vertex in the graph. In other words, this algorithm is $O(V \cdot E)$, where V is the number of vertices and E is the number of edges. Because each vertex in the move graph has only a handful of outgoing edges, E is proportional to V, and the running time is effectively $O(V^2)$. Such a processing feat is never going to happen. We need to find a better way.
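For a sense of how the sweep-and-relax loop behaves, here is a small runnable sketch on a four-vertex graph. It uses plain arrays instead of Underscore, and the `makeGraph` helper, the vertex names, and the edge weights are all made up for illustration.

```javascript
// Build a tiny graph: vertices carry a distance, edges carry a weight.
function makeGraph(names, edgeList) {
  const byName = {};
  names.forEach((name) => {
    byName[name] = { name, distance: Infinity, previous: null };
  });
  const edges = edgeList.map(([from, to, weight]) => ({
    source: byName[from],
    sink: byName[to],
    weight,
  }));
  return { vertices: Object.values(byName), edges, byName };
}

// Update the sink if the path through the source is cheaper.
function relax(edge) {
  if (edge.sink.distance > edge.source.distance + edge.weight) {
    edge.sink.distance = edge.source.distance + edge.weight;
    edge.sink.previous = edge.source;
  }
}

function bellmanFord(graph, source) {
  source.distance = 0;
  // One full sweep of all edges per vertex in the graph.
  graph.vertices.forEach(() => {
    graph.edges.forEach(relax);
  });
}

const graph = makeGraph(
  ['start', 'a', 'b', 'end'],
  [
    ['start', 'a', 4],
    ['start', 'b', 1],
    ['b', 'a', 2],
    ['a', 'end', 1],
    ['b', 'end', 5],
  ]
);
bellmanFord(graph, graph.byName.start);

console.log(graph.byName.end.distance);    // 4
console.log(graph.byName.a.previous.name); // b
```

The cheapest route is start, b, a, end with a total weight of 4, and following the `previous` links back from the end vertex recovers that route.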

One thing we should notice is that the Bellman-Ford algorithm is doing a ton of extra work to propagate distances through the graph. Most vertex distances will not change on any particular sweep of the edges because the distances around them haven't changed. The algorithm was designed to work with any type of graph and tolerate cycles, but the move graph has the added restriction of being a DAG. Using that restriction, we can improve the algorithm by traversing the graph in breadth-first order while relaxing edges.

    function dagShortestPaths(graph) {
      var vertices = new Queue();
      vertices.enqueue(graph.root);
      while (vertices.getLength() > 0) {
        // Visit vertices in breadth-first order, starting from the root.
        var vertex = vertices.dequeue();
        _.each(vertex.edges, function(edge) {
          relax(edge);
          vertices.enqueue(edge.sink);
        });
      }
    }

This algorithm is a great improvement over Bellman-Ford, but we've run into a somewhat silly issue. This algorithm is basically BFS with edge weights added into the mix. Because edge weights are just an additional metric in the move graph and not a strict cost of traversing the edges, they become meaningless, and the algorithm will perform no better than BFS. It should be obvious that it would take at least as long to run, and if we allow it to run over the entire graph as defined, much longer. Remember, BFS stopped as soon as it found the empty board node.
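The following sketch runs the same breadth-first relaxation on a tiny hypothetical graph and records the order in which vertices are dequeued. A plain array stands in for the queue, and the `makeVertex` and `connect` helpers and the weights are assumptions made for the example.

```javascript
function makeVertex(name) {
  return { name, distance: Infinity, previous: null, edges: [] };
}
function connect(source, sink, weight) {
  source.edges.push({ source, sink, weight });
}
function relax(edge) {
  if (edge.sink.distance > edge.source.distance + edge.weight) {
    edge.sink.distance = edge.source.distance + edge.weight;
    edge.sink.previous = edge.source;
  }
}

// Breadth-first traversal that relaxes each edge as it goes.
// Returns the names of the vertices in the order they were dequeued.
function dagShortestPaths(root) {
  root.distance = 0;
  const queue = [root];
  const order = [];
  while (queue.length > 0) {
    const current = queue.shift();
    order.push(current.name);
    current.edges.forEach((edge) => {
      relax(edge);
      queue.push(edge.sink);
    });
  }
  return order;
}

// Two first moves that both lead to the same end vertex.
const root = makeVertex('root');
const a = makeVertex('a');
const b = makeVertex('b');
const end = makeVertex('end');
connect(root, a, 5);
connect(root, b, 1);
connect(a, end, 1);
connect(b, end, 2);

const order = dagShortestPaths(root);
console.log(order.join(' ')); // root a b end end
console.log(end.distance);    // 3
```

The visiting order is level by level no matter what the weights are, and the shared end vertex is dequeued once for every path that reaches it, which is why this approach does no better than BFS on the move graph.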

This DAG shortest paths algorithm is great for smaller DAGs than what we're working with, and with DAGs that have true edge weights instead of weights that we're just using as heuristics. What we need is an algorithm that uses the edge weights in a way that dramatically reduces the number of vertices visited before the destination node is visited, and for that we need Dijkstra's algorithm.

Dijkstra's Algorithm

To implement Dijkstra's algorithm for the Color Walk move graph, we're going to need to revisit the heuristics we would use for edge weights. Simply using the number of blocks removed for each edge (move) probably won't be good enough, but to see why, let's first look at an outline of the algorithm.

    function Dijkstra(graph) {
      // Priority queue ordered by vertex distance.
      var vertices = new PriorityQueue();
      vertices.enqueue(graph.root);
      while (vertices.getLength() > 0) {
        // Always expand the vertex with the smallest distance so far.
        var vertex = vertices.extractMin();
        _.each(vertex.edges, function(edge) {
          relax(edge);
          vertices.enqueue(edge.sink);
        });
      }
    }

When we were searching for the empty board node with BFS, we were searching in move order, meaning we searched all first moves before searching all second moves, before searching all third moves, and so on. Now with Dijkstra's algorithm, we could be searching moves out of order. We could be headed down a promising path on the twelfth move when the next vertex pulled out of the priority queue is actually for the tenth move because the previous 12-move path has gotten too long, and there are shorter paths with fewer moves at the top of the queue. We want to capture the property that the shortest path is the one with the fewest moves, but balance that with the idea that moves that remove more blocks are likely to be on the shortest path.
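As a rough illustration of that out-of-order behavior, here is a self-contained sketch of the textbook form of Dijkstra's algorithm with an early stop at the target vertex. It is not the Color Walk solver: the `MinQueue` class (a linear-scan stand-in for a real priority queue), the helpers, and the graph with its non-negative weights are all assumptions for the example.

```javascript
// Minimal priority queue using a linear scan for the smallest distance.
// (A binary heap is the usual choice; this keeps the sketch short.)
class MinQueue {
  constructor() { this.items = []; }
  get length() { return this.items.length; }
  enqueue(vertex) { this.items.push(vertex); }
  extractMin() {
    let best = 0;
    for (let i = 1; i < this.items.length; i++) {
      if (this.items[i].distance < this.items[best].distance) best = i;
    }
    return this.items.splice(best, 1)[0];
  }
}

function makeVertex(name) {
  return { name, distance: Infinity, previous: null, edges: [], done: false };
}
function connect(source, sink, weight) {
  source.edges.push({ source, sink, weight });
}

// Returns the names of the vertices in the order they were expanded.
function dijkstra(root, target) {
  root.distance = 0;
  const queue = new MinQueue();
  queue.enqueue(root);
  const order = [];
  while (queue.length > 0) {
    const vertex = queue.extractMin();
    if (vertex.done) continue; // skip duplicate queue entries
    vertex.done = true;
    order.push(vertex.name);
    if (vertex === target) break; // stop early at the end vertex
    vertex.edges.forEach((edge) => {
      if (edge.sink.distance > vertex.distance + edge.weight) {
        edge.sink.distance = vertex.distance + edge.weight;
        edge.sink.previous = vertex;
        queue.enqueue(edge.sink);
      }
    });
  }
  return order;
}

const root = makeVertex('root'), a = makeVertex('a'), b = makeVertex('b');
const c = makeVertex('c'), end = makeVertex('end');
connect(root, a, 2);
connect(root, b, 1);
connect(b, c, 3);
connect(a, end, 1);
connect(c, end, 1);

const order = dijkstra(root, end);
console.log(order.join(' ')); // root b a end
console.log(end.distance);    // 3
```

The search starts down the path through `b` because it looks cheapest, then switches to `a` once the path through `b` becomes more expensive, and it reaches the end vertex without ever expanding `c`.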

The problem with simply combining the move number for a vertex and the number of blocks removed for that vertex is that we want to minimize the former and maximize the latter. We can't mix the two goals. We need to pick either minimization or maximization for the priority queue to work. It doesn't much matter which way we choose, so we'll stick with minimization and make the values for number of blocks removed negative. Thus, the weight for any given edge is

$$\text{weight} = 1 - \text{blocks\_removed\_on\_move}$$

This equation is true because each move will increase the number of moves by 1 and remove a certain number of blocks. To calculate the distance associated with a new vertex, v, we add the weight to the distance of the previous vertex, u:

$$\text{distance}_v = \text{distance}_u + \text{weight}_{u,v}$$

We can simplify this equation by noticing that the distance to any vertex is simply the accumulation of moves minus the accumulation of blocks removed, or:

$$\text{distance}_v = \text{move\_number} - \text{blocks\_removed}$$
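A quick numeric check shows that accumulating the per-move weights gives the same value as the simplified form. The block counts in `blocksRemovedPerMove` are invented for the example.

```javascript
// Hypothetical number of blocks removed on each of four consecutive moves.
const blocksRemovedPerMove = [3, 7, 2, 5];

// Edge weight for a single move: one move spent, minus the blocks it removed.
const weight = (blocksRemovedOnMove) => 1 - blocksRemovedOnMove;

// Accumulate the edge weights along the path, one relaxation at a time.
let distance = 0;
blocksRemovedPerMove.forEach((removed) => {
  distance += weight(removed);
});

// Simplified form: total moves minus total blocks removed.
const moveNumber = blocksRemovedPerMove.length;
const blocksRemoved = blocksRemovedPerMove.reduce((a, b) => a + b, 0);

console.log(distance);                   // -13
console.log(moveNumber - blocksRemoved); // -13
```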

With that worked out, we can start implementing our version of Dijkstra's algorithm for Color Walk. First, we add the algorithm to the list of choices in the HTML input element and to the switch statement:

    function Solver() {
      // ...
      this.init = function() {
        // ...
        $('#solver_type').change(function () {
          switch (this.value) {
            // ...
            case 'dijkstra':
              that.solverType = that.dijkstra;
              that.metric = areaCount;
              break;
            default:
              that.solverType = that.roundRobin;
              break;
          }
          // ...
        });
        // ...
      };

      this.dijkstra = function() {
        // Min-priority queue: the lowest-cost vertex is dequeued first.
        var vertices = new PriorityQueue({ comparator: function(a, b) { return a.cost - b.cost } });
        vertices = addVertices(vertices, 1, null, blocks[0].cluster.blocks.length);
        while (vertices.length > 0) {
          var vertex = vertices.dequeue();
          markers = vertex.markers;
          if (vertices.length > 250000) {
            // The queue is too large: commit to the current minimum vertex and bail out.
            doMarkedMoves();
            vertices.clear();
          } else {
            vertices = addVertices(vertices, vertex.depth + 1, vertex.control, vertex.cleared);
          }
          // Drop this vertex's reference to its markers.
          vertex.markers = null;
        }
      }
      // ...
    }

Because the vertices don't stay in inserted order, and we need to know the cost of vertices before adding them to the queue, we need to run checkGameBoard() on each vertex in addVertices(). (Check out this previous post on BFS for details on how it was implemented before.) This change simplifies the above function a bit, and other than what's already been described, we only need to make sure the queue doesn't get too big. If it grows too large, we stop adding vertices, do the moves corresponding to the current minimum vertex, clear the queue, and bail out. We'll come around again at the new move number and partially cleared board to try again to find the end-of-game vertex. Some boards will have so many similarly weighted paths that this tactic is necessary; otherwise the queue will get too large and cause a crash. Surprisingly, this doesn't happen too often, and the algorithm will be able to complete the search on most boards in a single sweep.

Now let's look at what's going on in addVertices(). The idea is simple. This function needs to run through all of the controls, checking to see how many blocks each control removes, calculating the cost of each of these new vertices, and adding the vertices to the queue. If it runs into the end-of-game vertex, it should stop. Here's what it looks like:

    function addVertices(vertices, depth, prev_control, prev_cleared) {
      var stop = false;
      _.each(controls, function (control) {
        // Skip the control used on the previous move, and skip everything once the game has ended.
        if (control !== prev_control && !stop) {
          var removed_blocks = control.checkGameBoard(depth, areaCount);
          if (endOfGame()) {
            doMarkedMoves();
            vertices.clear();
            stop = true;
          } else if (removed_blocks - prev_cleared > 0) {
            // Each child vertex gets its own copy of the markers.
            var markers_dup = markers.slice();
            var cost = max_moves*depth - removed_blocks;
            // Within 10 blocks of the end: reduce the move scale factor to 5.
            if (removed_blocks > 590) cost -= (max_moves - 5)*depth;
            vertices.queue({markers: markers_dup,
                            depth: depth,
                            control: control,
                            cost: cost,
                            cleared: removed_blocks});
          }
        }
      });
      // The caller reassigns the queue from the return value.
      return vertices;
    }

Several details in this function took some care to get right. The check that at least one block was removed on the current move before adding the new vertex to the queue needed to be correct. The correct calculation is the difference between the removed blocks after the move and those blocks that were cleared before that move, because areaCount() counts all of the marked blocks in markers, not just the ones marked on the current move. Therefore, I needed to pass prev_cleared, the number of previously cleared blocks, into addVertices() from the parent vertex, which means I needed to store the number of blocks removed on a move in that move's vertex for this whole calculation to work.

Another subtle point is that with vertices being pulled out of the queue in a different order than they were inserted, each set of markers needed to be copied instead of sharing the same copy among all of the child vertices from any given parent vertex. That means the markers.slice() line needed to get moved inside the each() loop.
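The difference between sharing and copying can be seen in a few lines. This sketch assumes the markers array holds primitive values such as numbers, so the shallow copy made by slice() is enough; the arrays here are invented for the example.

```javascript
// Sharing one array: a change made for one child vertex leaks into the other.
const sharedMarkers = [0, 1, 0, 0];
const sharedA = sharedMarkers;
const sharedB = sharedMarkers;
sharedA[2] = 1;
console.log(sharedB[2]); // 1

// Copying with slice(): each child vertex gets its own snapshot.
const parentMarkers = [0, 1, 0, 0];
const childA = parentMarkers.slice();
const childB = parentMarkers.slice();
childA[2] = 1;
console.log(childB[2]);        // 0
console.log(parentMarkers[2]); // 0
```

With a plain FIFO queue the children of one parent are processed back to back, so sharing can go unnoticed, but once vertices come out of a priority queue in cost order each one needs its own snapshot.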

Then, the basic cost function of move_number - blocks_removed didn't work too well. Why that is has to do with the scale of those two values and how they work together. Imagine getting rid of either one of them. If the cost function was only move_number, then every vertex with a smaller move number would be pulled out of the priority queue before any vertex with a larger move number. Dijkstra's algorithm would reduce to BFS in that case. If, on the other hand, the cost function was only -blocks_removed, then the vertex with the most blocks removed would always be pulled out of the queue first, reducing the algorithm to the greedy algorithm.

These three possible cost functions all lie on a continuum, with BFS on one end, the greedy algorithm on the other end, and move_number - blocks_removed somewhere in the middle. But move_number - blocks_removed is not the only intermediate option. Consider that move_number is on the order of 30-40 moves, and blocks_removed will always end in 600 for a 30x20 block board. The move_number has much less weight than blocks_removed, but we can add a scale factor to it. This scale factor is actually necessary to get good performance because otherwise it's possible, and indeed likely, that the minimum vertex pulled out of the queue at some point during the search will clear out a dozen or more blocks and end the game, but be several moves more than the minimum number of moves. We want to add weight to move_number, so we can use max_moves from the UI input as the scale factor for move_number (represented in the code as depth).

With the correct scale factor—found to be around 25—the performance of the algorithm is much better, but it then hits another snag. Near the end of the search, the algorithm hits a wall and starts churning on all of the other possibilities in the queue because the move_number gets larger than blocks_removed on the vertices closest to the end-of-game vertex. The algorithm kind of devolves into BFS again, and it would take forever backtracking to explore older paths in its history unless we can find a way of forcing it to choose the vertices close to the finish line. To force this behavior, if we're within 10 blocks of finishing, we'll reduce the scale factor to bump the priority of those vertices. Since we're within 10 blocks of finishing, there are likely only a few colors of blocks left, and we can move more towards the greedy algorithm on the spectrum to finish quickly.
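The effect of the scale factor can be sketched with a standalone version of the cost calculation from addVertices(). Here the scale factor is passed in as a parameter rather than read from max_moves, and the depths and block counts are hypothetical.

```javascript
// Cost of a vertex: scaled move number minus total blocks removed.
function vertexCost(depth, removedBlocks, scale) {
  let cost = scale * depth - removedBlocks;
  // Within 10 blocks of clearing a 600-block board, drop the scale factor to 5.
  if (removedBlocks > 590) cost -= (scale - 5) * depth;
  return cost;
}

// Two hypothetical vertices: a shallow one, and a deeper one that has cleared more blocks.
const shallow = { depth: 10, removed: 200 };
const deep = { depth: 14, removed: 260 };

// Scale factor 1: the deeper vertex has the lower cost, as the greedy algorithm would prefer.
console.log(vertexCost(shallow.depth, shallow.removed, 1)); // -190
console.log(vertexCost(deep.depth, deep.removed, 1));       // -246

// Scale factor 25: the shallower vertex has the lower cost, pulling the search toward BFS.
console.log(vertexCost(shallow.depth, shallow.removed, 25)); // 50
console.log(vertexCost(deep.depth, deep.removed, 25));       // 90

// Near the end of the game, the reduced scale factor makes a finishing vertex very cheap.
console.log(vertexCost(32, 595, 25)); // -435
```

Changing only the scale factor flips which vertex the priority queue would hand back first, which is the continuum between the greedy algorithm and BFS described above.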

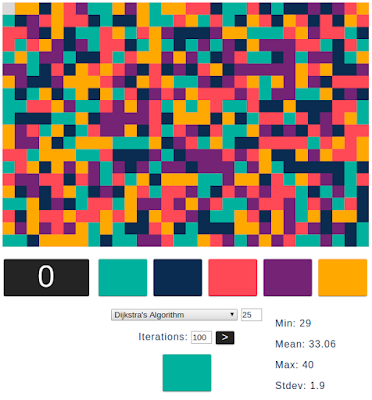

After getting all of these little, important details right, we can finally run a version of Dijkstra's algorithm that will run to completion without crashing and, hopefully, find some good solutions to the game boards. Let's see what happens when we run 100 iterations of this algorithm.

Dijkstra's Algorithm Results

We should keep in mind when looking at these results that the version of Dijkstra's algorithm that we're using is not the complete algorithm. We're intentionally stopping early when we find the first end-of-game vertex because if we let it run to completion, it would take an eternity to run, and in its current form would always run out of memory and crash. Taking that into account, here are the results with a move scale factor of 25:That result seems pretty good. How does it stack up to the other algorithms?

| Algorithm | Min | Mean | Max | Stdev |
|---|---|---|---|---|
| RR with Skipping | 37 | 46.9 | 59 | 4.1 |
| Random with Skipping | 43 | 53.1 | 64 | 4.5 |
| Greedy | 31 | 39.8 | 48 | 3.5 |
| Greedy Look-Ahead-2 | 28 | 37.0 | 45 | 3.1 |
| Greedy Look-Ahead-5 | 25 | 33.1 | 41 | 2.8 |
| Max Perimeter | 29 | 37.4 | 44 | 3.2 |
| Max Perimeter Look-Ahead-2 | 27 | 35.0 | 44 | 2.8 |
| Perimeter-Area Hybrid | 31 | 39.0 | 49 | 3.8 |
| Deep-Path | 51 | 74.8 | 104 | 9.4 |
| Path-Area Hybrid | 35 | 44.2 | 54 | 3.5 |
| Path-Area Hybrid Look-Ahead-4 | 32 | 38.7 | 45 | 2.7 |
| BFS with Greedy Look-Ahead-5 | 26 | 32.7 | 40 | 2.8 |
| DFS with Greedy Look-Ahead-5 | 25 | 34.8 | 43 | 3.9 |
| Dijkstra's Algorithm | 29 | 33.1 | 40 | 1.9 |

Well, the minimum number of moves for Dijkstra's algorithm was bested by GLA, BFS, and DFS, but the mean was only barely bested by BFS and the maximum was equal to the previous best, BFS. Look at the standard deviation, though. Dijkstra's algorithm gives consistently good solutions by a significant margin. The next best algorithm for giving consistent results is the deep path-area hybrid algorithm with look-ahead by 4, and that one performs 5.6 moves worse on average.

Dijkstra's algorithm definitely has some nice characteristics, but why doesn't it perform the best in all cases? The main reason is what was mentioned earlier: we're not running the algorithm to completion. Cutting it off early means that there are still potentially shorter paths in the priority queue that could be explored, but we stop searching the first time we find the end-of-game vertex. The weight function also plays a role here because we're trying to balance the primary goal, the number of moves, against the secondary goal that helps focus the search, the number of blocks removed. If we increase the scale factor to add more weight to the number of moves, the search will take much longer, and it will run out of memory for the growing priority queue more often, requiring the algorithm to commit to some number of moves for the last minimum vertex it pulls out of the queue and start again from that point.

Part of what makes Dijkstra's algorithm so consistently effective is that it is another form of a greedy algorithm, but it keeps track of other promising paths while it pursues what looks like the best option at every moment. Each time through the while loop, it's looking at the current best possible next vertex in the path, and when the child vertices of that vertex are added to the queue, the next best vertex may not be one of those just added, but a vertex from a different promising path stored in the queue. The algorithm will remember all of the other paths it could take, and once the current path gets too expensive, it will switch to a cheaper one until that one also gets too expensive. This constant development of numerous good options for the shortest path turns out to be a very efficient way to find one that's quite short, if not the shortest.

Given the potential of Dijkstra's algorithm, we're not quite done exploring it. While implementing it, some options became apparent for making it perform better. We could look at another hybrid approach with the greedy algorithm, either running the greedy algorithm before or after Dijkstra's algorithm, or we could try running Dijkstra's algorithm twice—once to half the blocks removed and again to complete the game. Much experimentation is possible here, combined with varying the scale factor, to see if we can push Dijkstra's algorithm to beat GLA-5. We'll explore those options next time.

Article Index

Part 1: Introduction & Setup

Part 2: Tooling & Round-Robin

Part 3: Random & Skipping

Part 4: The Greedy Algorithm

Part 5: Greedy Look Ahead

Part 6: Heuristics & Hybrids

Part 7: Breadth-First Search

Part 8: Depth-First Search

Part 9: Dijkstra's Algorithm

Part 10: Dijkstra's Hybrids

Part 11: Priority Queues

Part 12: Summary
